Folks,

Every day, we are exposed to the results of surveys and polls. A typical example might be a report that President Obama is leading Mitt Romney in a poll by 48% to 45%, but that the results are not statistically significant. A reasonable question might be, “What does it mean to be statistically significant?”

To determine statistical significance, a statistician will typically use this criterion: if there is a 5 percent or smaller chance that a difference this large would show up purely from random sampling when no real difference exists, the result is considered statistically significant. So, when another poll states that President Obama leads by 49% to 44% and the lead is statistically significant, there is less than a 5% chance that a lead this large would appear by chance alone if the race were actually tied. The 5% criterion is not cast in concrete; sometimes 10%, 1%, or even 0.1% is used. However, tradition has given us 5% as the default value for “statistical significance.” It is also helpful to understand that the more data points in the sample, the more likely it is that a real difference of a given size will be found statistically significant.
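To make the poll example concrete, here is a minimal Python sketch. It assumes a simple random sample and uses hypothetical sample sizes, since the poll sizes are not given. It applies the standard large-sample z-test to the lead between two candidates measured in the same poll, where the variance of the lead is (p1 + p2 - (p1 - p2)²)/n.

```python
import math

def lead_p_value(p1: float, p2: float, n: int) -> float:
    """Two-sided p-value for the lead p1 - p2 of two candidates in one poll.

    p1, p2: vote shares as fractions (e.g. 0.49 and 0.44)
    n: number of respondents (hypothetical; the poll size is not stated)
    Uses the normal (z) approximation, which assumes a simple random sample.
    """
    lead = p1 - p2
    # Variance of the difference of two shares drawn from the same sample
    variance = (p1 + p2 - lead ** 2) / n
    z = lead / math.sqrt(variance)
    # erfc(|z| / sqrt(2)) is the two-sided tail probability of a standard normal
    return math.erfc(abs(z) / math.sqrt(2))

ALPHA = 0.05  # the traditional 5% criterion

for p1, p2 in [(0.48, 0.45), (0.49, 0.44)]:
    for n in (500, 1600, 5000):  # hypothetical sample sizes
        p = lead_p_value(p1, p2, n)
        verdict = "significant" if p <= ALPHA else "not significant"
        print(f"{p1:.0%} vs {p2:.0%}, n={n}: p = {p:.3f} ({verdict})")
```

Running it shows both points above: with 1,600 hypothetical respondents, the 48% vs 45% lead is not significant while the 49% vs 44% lead is, and with 5,000 respondents even the 48% vs 45% lead becomes significant.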

But if a result is statistically significant, is it always “practically” significant? As an example, let’s say that you really like chocolate. Your favorite brand is in a taste test and scores 9.6 out of 10, whereas a new chocolate scores 9.7 out of 10, and the difference is statistically significant. On the downside, the new chocolate costs 5 times as much. Is it worth the extra money to switch to the new chocolate? In this case, we have to ask: is the difference practically significant? The answer is, in all likelihood, no. A difference of 0.1 point out of 10 is very small, and taste is subjective. The subjectivity of a taste test may mean that you either can’t tell the difference or still like your favorite chocolate best.

Let’s consider another, less subjective example. Suppose that, in a certain application, solder voiding is a critical concern, so you measure the voiding of two solder pastes. After collecting hundreds of data points, you find that the average voiding of one solder paste is 8% and that of the other is 7%. Analysis with Minitab® software tells you that the difference is statistically significant. But is the difference practically significant? Probably not.
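A comparison like this is commonly run as a two-sample t-test. As a rough stand-in, here is a minimal Python sketch of a large-sample z-test on summary statistics with an unpooled (Welch-style) standard error; with hundreds of points per paste, the normal approximation is close to the t-test. The standard deviation (3 percentage points) and sample size (300 per paste) are hypothetical values chosen for illustration, not data from this example.

```python
import math

def two_sample_p_value(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Two-sided p-value for a difference in means (large-sample z approximation).

    Uses the unpooled (Welch-style) standard error; with hundreds of points per
    group, this is close to what a two-sample t-test would report.
    """
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    z = (mean_a - mean_b) / se
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical summary statistics: voiding in %, SD and n are assumed values
p = two_sample_p_value(mean_a=8.0, sd_a=3.0, n_a=300, mean_b=7.0, sd_b=3.0, n_b=300)
print(f"difference = 1.0 percentage point, p = {p:.5f}")
print("statistically significant" if p <= 0.05 else "not statistically significant")
```

With only 10 points per paste and the same assumed spread, the same 1-point difference would not be significant (p of roughly 0.46), which is the sample-size effect described earlier.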

How do you determine practical significance? Typically, by experimentation or, in some cases, by experience. In our solder voiding example, suppose experiments showed that, as long as the average voiding is below 30%, there are no concerns. In light of this, engineering may have set a specification that average voiding must not exceed 25%. (All of this discussion assumes that the spread, or standard deviation, of the data is not large, but that subject is the topic of another discussion.) So, in this case, the difference between 7% and 8% voiding may be statistically significant, but it is not practically significant. A prudent engineer might therefore select the 8% paste if it had other desirable features, such as better response to pause, better resistance to graping, or fewer head-in-pillow defects.
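The spec-based reasoning can be written down as a simple check. This is a hypothetical sketch, not a standard method: it treats a difference as practically significant only when it changes whether a paste meets the 25% average-voiding spec, and, like the discussion above, it looks only at averages and ignores spread. The names `meets_spec` and `practically_significant` are illustrative.

```python
SPEC_MAX_AVG_VOIDING = 25.0  # % average voiding allowed by the example spec

def meets_spec(mean_voiding: float, spec_max: float = SPEC_MAX_AVG_VOIDING) -> bool:
    """True if the average voiding is within the spec limit."""
    return mean_voiding <= spec_max

def practically_significant(mean_a: float, mean_b: float,
                            spec_max: float = SPEC_MAX_AVG_VOIDING) -> bool:
    """Hypothetical rule: a difference matters only if it flips pass/fail against spec."""
    return meets_spec(mean_a, spec_max) != meets_spec(mean_b, spec_max)

for name, mean in [("paste A", 8.0), ("paste B", 7.0)]:
    margin = SPEC_MAX_AVG_VOIDING - mean
    print(f"{name}: {mean:.1f}% voiding, {margin:.1f} points below spec")

print("practically significant:", practically_significant(8.0, 7.0))
```

Both pastes sit far below the limit, so the 1-point difference does not change the outcome; a pair such as 24% and 26% would.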

So always ask yourself: is the difference both statistically and practically significant?

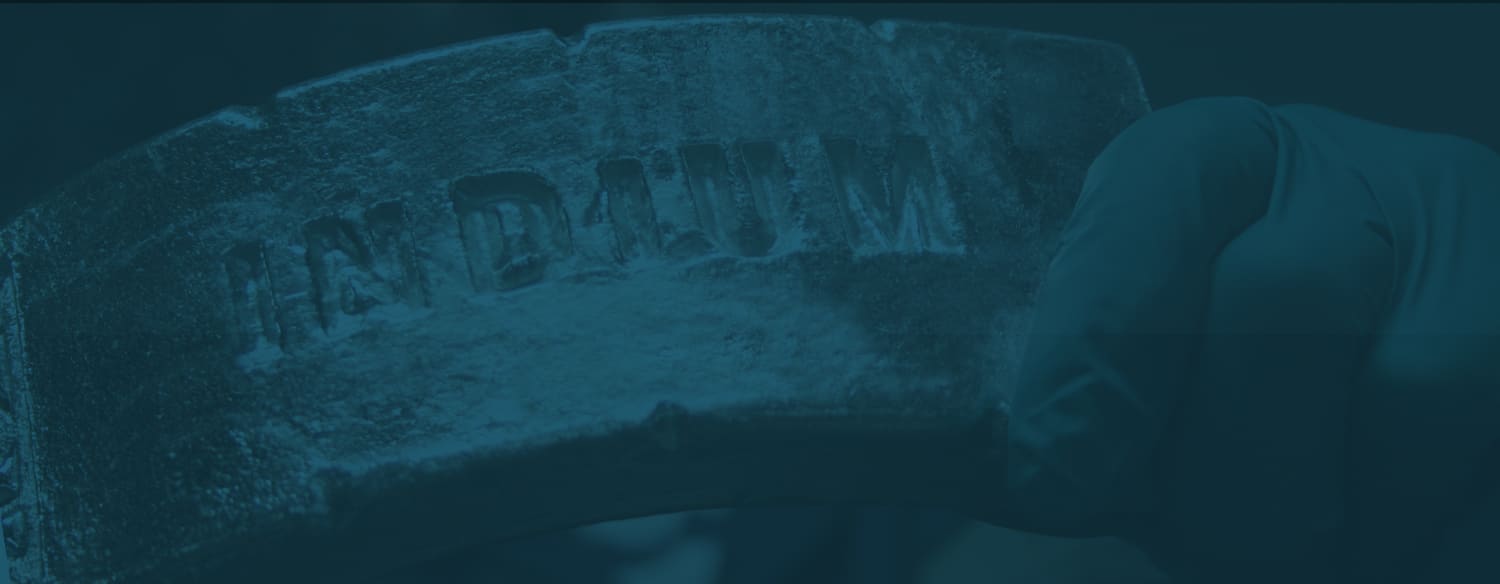

The image shows solder joint graping, which is often more of a concern than voiding.

Cheers,

Dr. Ron